Mastra finally found an AI code review tool their team can trust

Catches SQL injection vulnerabilities

Provides secure, context-aware fixes

Detects breaking changes during refactors

Flags incorrect API signatures

For Abhi Aiyer, the CTO of Mastra, maintaining code quality is mission-critical. Mastra is the leading TypeScript agent framework, with over 300,000 weekly downloads and companies like Softbank and Adobe running its agents in production.

They have a rapidly evolving codebase, a 16-person engineering team, and a user community that depends on the reliability of every release. With sweeping large-scale changes for the push to Mastra 1.0, the team needed a way to maintain high quality while shipping at the speed a leading AI framework in 2025 demands.

Before CodeRabbit, Mastra relied on manual reviews and tried several AI tools, though none fully earned the team’s trust. “The tools we evaluated provided a varied experience across engineers,” Abhi shared. “Some individuals trusted it, while others did not… I cannot use a tool with a 50% consistency rate.”

When Abhi learned that open source projects could use CodeRabbit for free, he tried it. After just one day of testing both tools side by side, the decision was obvious. “On that day, CodeRabbit clearly beat our other AI review tool. We canceled the next day.”

What started as a quick experiment has now become an integral part of Mastra’s development workflow.

Challenge: Keeping quality high across a fast-moving agent framework

Before CodeRabbit, Mastra’s engineers were already pushing a lot of code quickly. But every decision in software engineering comes with tradeoffs, and shipping at that speed without a reliable review tool meant bugs.

Inconsistent code reviews across the team:

Because team members had different review styles, expectations were uneven. Some engineers skimmed. Others dug deep. Some issues were caught while others were missed. It depended on the engineer, the day, the file, or the context.

An AI review tool that the team didn’t trust:

“We ship pretty quickly, and with that, there’s a lot of opportunity for mistakes along the way,” Abhi shared. Mastra needed a tool that was reliable and consistent. “Our other AI code review tool was not consistent for all team members,” Abhi said. With a high volume of PRs, those inconsistencies compounded.

The pressure of a major 1.0 release:

Ahead of the Mastra 1.0 cut, the team made a long list of breaking changes across the framework. This created fragility in the codebase, at a time when hidden issues would hurt the most. "Everyone was frustrated. The number of comments they had to address revealed just how much unnecessary complexity had accumulated in the codebase over the past year." During this period, CodeRabbit became invaluable. Abhi admitted, "CodeRabbit’s feedback was difficult to process due to the sheer number of errors it identified, but it was necessary."

Why Mastra loves CodeRabbit

Catches critical issues that matter

Mastra’s engineers rely heavily on AI-assisted development tools, and bugs used to slip through, especially during big refactors or sweeping changes. CodeRabbit consistently surfaces real issues that humans miss.

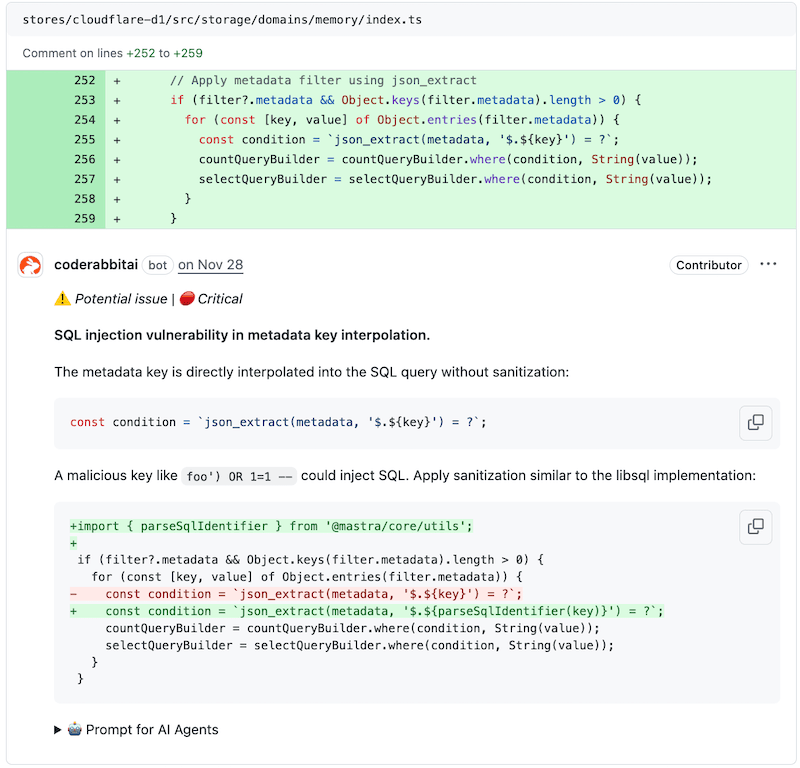

Example: Preventing a real SQL Injection risk

In one PR, CodeRabbit flagged a critical SQL injection vulnerability where unescaped metadata keys were being directly interpolated into a ClickHouse query. (See PR below)

It explained the risk clearly: “The vulnerability is real… A malicious key like foo') OR 1=1-- would break the query structure.” It then provided a secure rewritten fix using Mastra’s own parseSqlIdentifier utility, something a human reviewer might not have caught or remembered in the moment.

This immediate identification and suggested fix saved the engineering team significant time that would have otherwise been spent in manual security review, prevented a major security flaw from reaching production, and reinforced best practices for secure coding among the developers.
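To make the risk concrete, here is a minimal TypeScript sketch of the pattern described above. It is an illustration under stated assumptions, not the code from the Mastra PR: `assertSafeIdentifier` is a hypothetical stand-in for a validation utility (it is not Mastra’s `parseSqlIdentifier`), and the query fragment and the `metadata` column name are invented for the example.

```typescript
// Hypothetical validator for illustration only; NOT Mastra's parseSqlIdentifier.
// It accepts plain identifiers: a letter or underscore followed by letters,
// digits, or underscores. Anything else is rejected.
const IDENTIFIER_PATTERN = /^[A-Za-z_][A-Za-z0-9_]*$/;

function assertSafeIdentifier(name: string): string {
  if (!IDENTIFIER_PATTERN.test(name)) {
    throw new Error(`Unsafe SQL identifier: ${JSON.stringify(name)}`);
  }
  return name;
}

// Vulnerable pattern: the metadata key is interpolated into the SQL text
// without any check, so a quote in the key can close the string literal early.
// (The query shape and the `metadata` column are assumptions for this sketch.)
function unsafeMetadataFilter(key: string): string {
  return `JSONExtractString(metadata, '${key}') = {value:String}`;
}

// Safer pattern: the key is validated before it ever reaches the SQL text,
// while the value is still passed as a bound query parameter.
function safeMetadataFilter(key: string): string {
  return `JSONExtractString(metadata, '${assertSafeIdentifier(key)}') = {value:String}`;
}

// The malicious key quoted in the review comment.
const maliciousKey = "foo') OR 1=1--";

// The unsafe version lets the key rewrite the structure of the condition.
console.log(unsafeMetadataFilter(maliciousKey));

// The safe version refuses the key instead of building the query.
try {
  safeMetadataFilter(maliciousKey);
} catch (err) {
  console.log((err as Error).message);
}
```

The design choice in this sketch is to reject suspicious keys outright rather than try to escape them: identifiers and keys that end up in SQL text generally cannot be bound as parameters the way values can, so an allowlist check is the simpler safeguard.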

Check out Mastra PR

Investigates how the code works, not just what’s in the diff

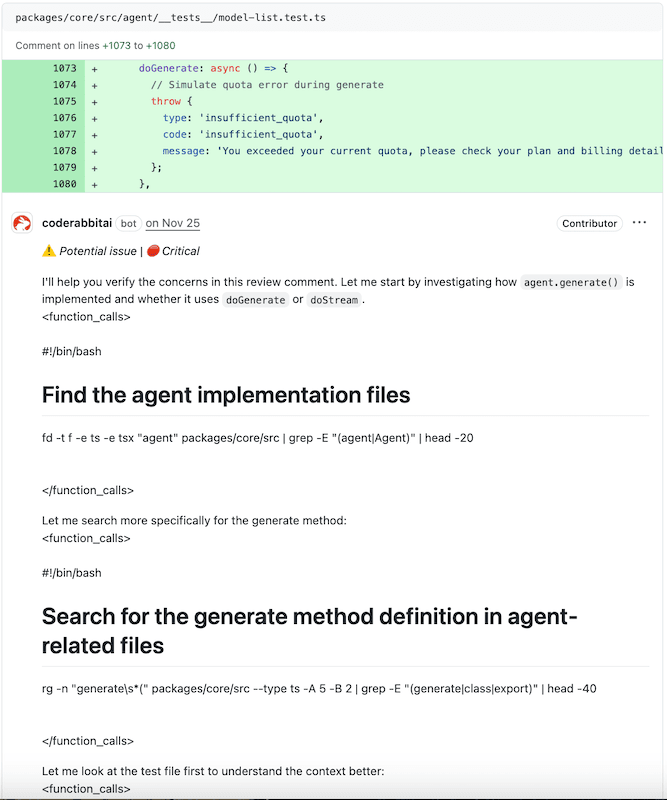

In another PR, CodeRabbit didn’t just review the lines shown. It performed a search across the repository to understand how agent.generate() and doGenerate were implemented.

It walked through:

- Locating agent files

- Identifying method definitions

- Reviewing test context

- Comparing code paths

Check out Mastra PR

This focus on codebase context helps engineers understand impact across the entire framework, especially during large feature changes.

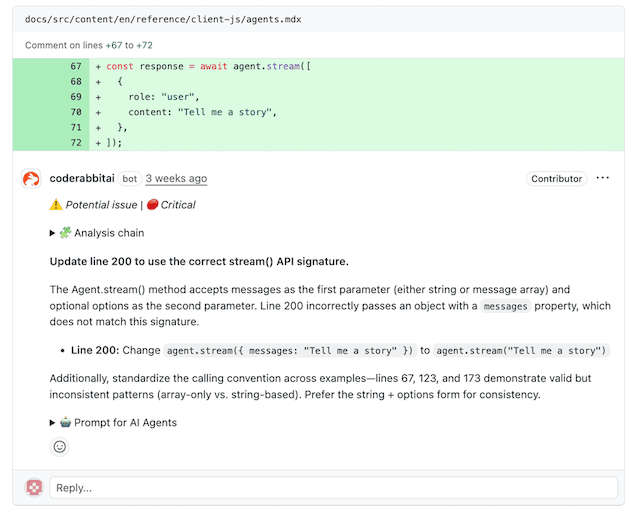

Fixes API signature misuse before it becomes a breaking issue

In documentation, CodeRabbit caught that the agent.stream() call was using an incorrect API signature. It clarified the proper usage and suggested a standardized calling convention: “Line 200 incorrectly passes an object with a messages property… prefer the string + options form for consistency.”

Check out Mastra PR

This prevents subtle inconsistencies that often trip up framework users later.

A consistent and trusted opinionated reviewer

Abhi intentionally hired engineers who move fast, build aggressively, and aren’t afraid to ship. But that also means they’re prone to overlooking defensive programming and edge cases. That’s where CodeRabbit shines.

“It’s like the annoying person on the team that doesn’t want you to move forward because of a thousand things… but they’re actually important, and you’re just being short-sighted by not listening.”

Engineers now resolve every CodeRabbit comment before a merge. No follow-up PRs. No “I’ll fix it later.” As Abhi put it: “CodeRabbit killed the follow-up PR.” While that does mean that it sometimes takes longer to merge an individual PR, it saves time over creating iterative PRs to fix problems in the initial PR. And as many engineering teams know all too well, the mythical “follow-up PR” rarely happens: people jump to the next ticket, priorities shift, and that promised clean-up quietly disappears. Before CodeRabbit, those loose ends would linger and the codebase quality would stay frozen.

A baseline of quality before humans even look

Mastra’s workflow now looks like this:

- Open a PR

- Fix everything CodeRabbit finds

- Merge confidently once all comments are addressed

Abhi summed it up simply: “Once all the CodeRabbit comments are gone and tests are passing, you feel a lot more confident pushing the green button.” Engineers now treat CodeRabbit as the first and most reliable reviewer, removing ambiguity about what “review ready” means.

Results: Cleaner code, fewer regressions & a more confident team

A smoother path to Mastra 1.0

The Mastra team credits CodeRabbit for making the massive 1.0 transition safer and more systematic. “The help was very valuable; code reviews would undoubtedly have been much harder without it.” By surfacing inconsistencies, breaking changes, and leftover cruft from the past year, CodeRabbit ensured the final release landed in a stable place.

An acceptance rate of 70%-85%

Mastra’s team doesn’t skip CodeRabbit’s feedback; they address it all. The numbers reflect that: engineers accept and resolve 70% to 85% of critical comments before merging any PR.

“We just listen to it as if it’s always correct,” Abhi explained. “And so far it has been.”

There’s no more “I’ll fix it later” or negotiating around feedback. Engineers open PRs, address everything CodeRabbit flags and only merge once comments are resolved.

Teamwide alignment on what quality looks like

Before, some engineers wanted nitpicks removed. Others didn’t. Some reviewed deeply; others shallowly. Now, CodeRabbit sets the bar. “CodeRabbit is helpful when we're doing massive changes that impact multiple parts of our framework; that's when the bugs happen.” That’s created more consistency in the Mastra codebase, which makes it easier to work with.

Trust that allows them to let CodeRabbit make changes directly

Engineers trust the tool so much that they will soon automate its use even more. Mastra is already integrating CodeRabbit with their in-house coding agent so that CodeRabbit comments

CodeRabbit = The AI code review tool Mastra’s team trusts

Before CodeRabbit

- Inconsistent reviews across a 16-person team

- An AI tool that the team didn't trust or respect

- Critical bugs slipping through during major releases

- No baseline for what "review ready" meant

After CodeRabbit

- Eliminated follow-up PRs

- Catches critical vulnerabilities humans miss (SQL injection, API misuse)

- Consistent quality bar across all engineers

- Smoother, safer path to Mastra 1.0 release

For Abhi and the Mastra team, CodeRabbit solved what other AI review tools couldn’t: earning genuine trust across the entire engineering team.

CodeRabbit stands out from other tools because it delivers consistent, reliable feedback that engineers actually respect. It goes beyond surface-level reviews to investigate how code works, catches real security risks, and maintains quality standards even when the team is shipping at high volume. This combination ensures that Mastra continues to deliver the reliability their community depends on with an AI reviewer that the entire team finally trusts.

Languages

JavaScript/TypeScript

Challenge

Mastra needed a reliable AI code review tool to maintain consistent quality across their fast-moving codebase during a critical 1.0 release, but their existing tool was inconsistent.